PAISS19 AI Summer School

Posted on Fri 25 October 2019

PRAIRIE Artificial Intelligence Summer School personal notes

All the talks were amazing, but here I will focus on a few elements only:

- Yann LeCun - Energy-Based Self-Supervised Learning

- Alexei Efros - Self-Supervised Visual Learning and Synthesis

- Jean Paul Laumond - Mathematical foundations of robot motion

- more info coming soon

Yann LeCun - Energy-Based Self-Supervised Learning

PAISS opened with Yann LeCun’s talk on Energy-Based Self-Supervised Learning.

One of the goals of this conference is to present the current techniques and applications of machine learning, which have had a huge number of successes, but also to put these techniques into perspective against the learning efficiency of humans and animals.

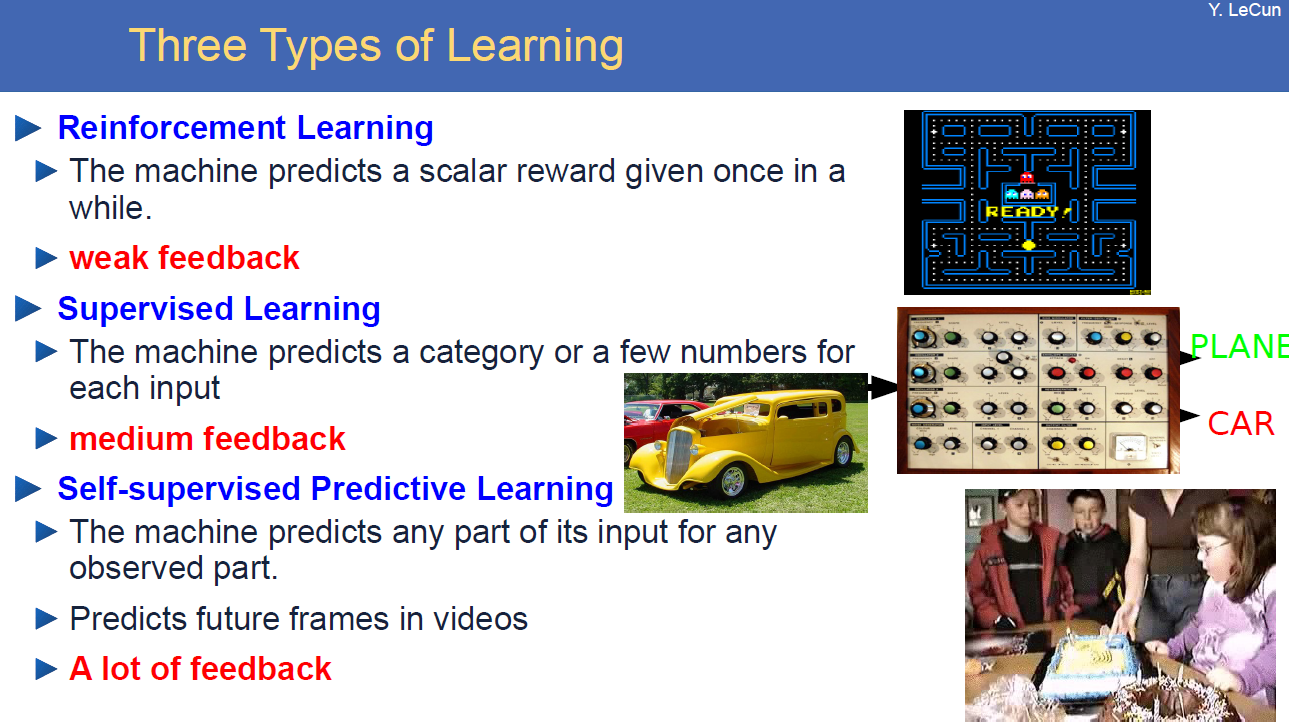

Most of the techniques we use today (for NLP, image recognition, …) rely on supervised learning, which is made possible by labelled data.

For reinforcement learning (where you don’t tell the machine the correct answer, only whether its result is good or bad), there are a lot of applications for learning to play games. They all use ConvNets, but the efficiency compared to humans is terrible! It takes 83 hours of equivalent real-time play (18 million frames) to reach a performance that humans reach in 15 minutes of play. [Hessel ArXiv:1710.02298]
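To get a feel for the size of that gap, here is a quick back-of-the-envelope check. It assumes the game runs at 60 frames per second, which is my assumption and not something stated in these notes; the variable names are only for illustration.

```python
# Back-of-the-envelope check of the sample-efficiency gap quoted above.
# Assumption (not from the talk notes): the game runs at 60 frames per second.
FRAMES = 18_000_000      # frames of play used by the agent
FPS = 60                 # assumed frame rate
HUMAN_MINUTES = 15       # play time a human needs, per the notes

# Convert frames to hours of real-time play.
agent_hours = FRAMES / FPS / 3600

# How many times more play time the agent needs than a human.
ratio = agent_hours * 60 / HUMAN_MINUTES

print(f"agent play time: {agent_hours:.1f} hours")
print(f"agent needs about {ratio:.0f}x more play time than a human")
```

Under that assumption, 18 million frames does come out at roughly 83 hours, which is a few hundred times more play time than the human needs.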

This highlights the problem with reinforcement learning: it requires too many trials. In a game or a simulation, running many trials is acceptable (though still expensive and time-consuming), but it is neither realistic nor practical in the real world, where trials can kill and cause damage, and where you cannot run the world faster than real time on many machines in parallel!

So how come humans do not need to drive off a cliff many times to learn that the car will fall and they’ll die?

How do humans and animals learn so quickly?

Their learning is neither supervised nor reinforced: it is predictive. They learn to make predictions about their environment.

LeCun explains that if you show a 4-month-old baby a floating car, the baby doesn’t seem to take any interest in it. But after 6 or 8 months of “unsupervised learning”, babies already understand basic laws of physics, for example that objects like toy cars cannot float in the air. They are amazed and stare at the car with a surprised face, because they have understood that it goes against the “laws of physics”!

During this part, since it is somewhat related to what I've done at INRIA, I was really expecting him to cite my master's thesis supervisor Pierre-Yves Oudeyer and the Flowers team’s work on intrinsic motivation, IMGEP curiosity and robotic playgrounds, or the work of Celeste Kidd.

Also related to the way we learn and make predictions about our world from very few observations is Stanislas Dehaene’s course at the Collège de France, “Le cerveau statisticien : la révolution Bayésienne en sciences cognitives”. He uses Josh Tenenbaum's "tufas" example from Bayesian models of human learning and inference, which I find very good and clear.

(However, LeCun did cite Emmanuel Dupoux, who worked with Stanislas Dehaene :) )

So "prediction" is the essence of intelligence: it is how we expect things to happen, and it is not supervised. The future of AI will be driven by advances in reinforcement learning as well as supervised and unsupervised machine learning, but “The Revolution Will Not Be Supervised” (nor purely reinforced), a reference to Gil Scott-Heron’s song.

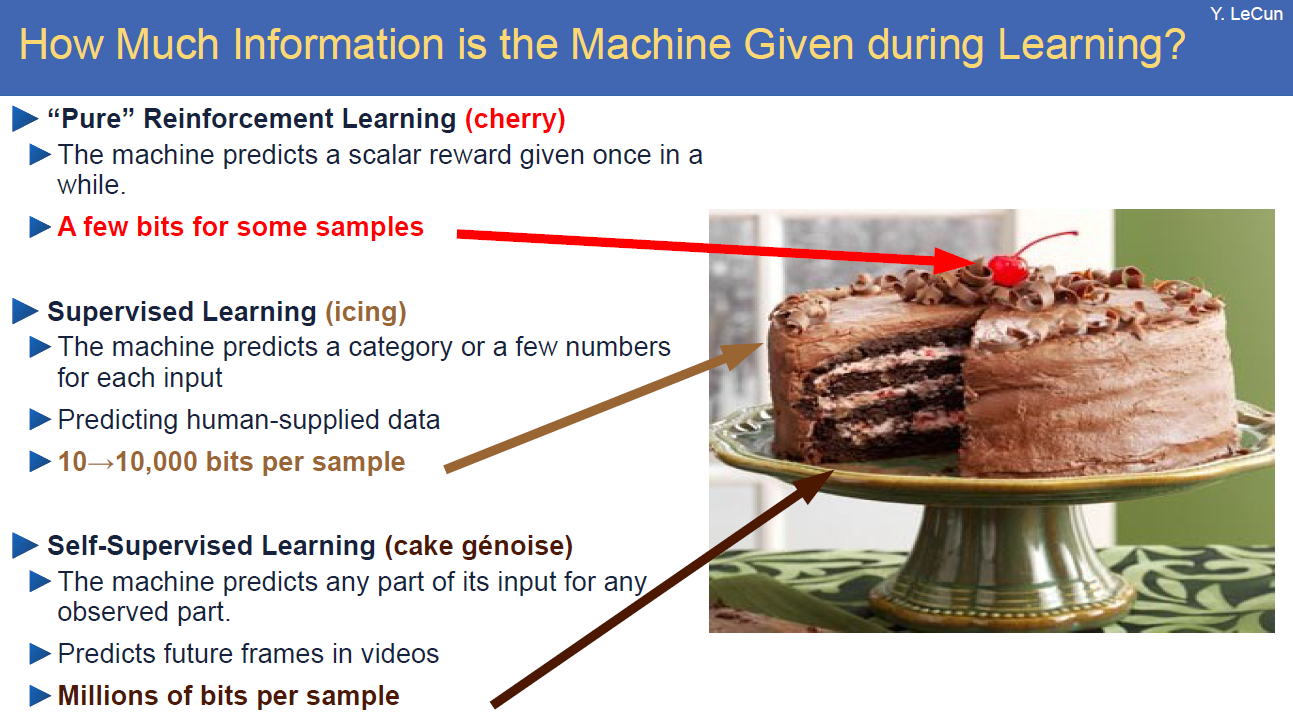

He then made the famous cake analogy:

“If intelligence was a cake, unsupervised learning would be the cake, supervised learning would be the icing on the cake, and reinforcement learning would be the cherry on the cake. We know how to make the icing and the cherry, but we don’t know how to make the cake.” — Yann LeCun

He has since renamed it, in his own words: “I now call it "self-supervised learning", because "unsupervised" is both a loaded and confusing term.” (SSL fb post)

He made it clear that, while AI systems aren’t as smart as cats or infants, and there are many obstacles still to surmount, the future of AI is wide open.

Conclusion on what’s hype and what to remember:

- Self-Supervised Learning is the future

- Hierarchical feature learning for low-resource tasks and massive networks

- Learning Forward Models for Model-Based Control/RL

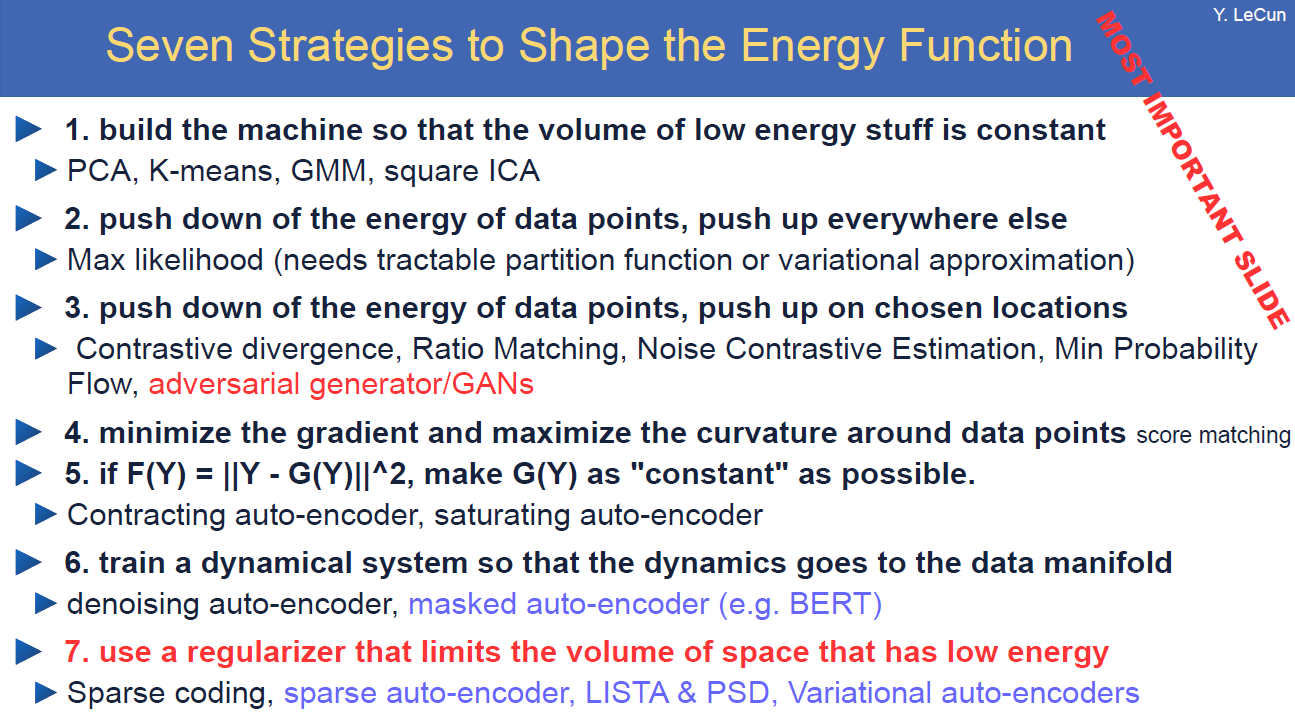

- Energy-Based Approaches

- Latent-variable models to handle multimodality

- Regularized Latent Variable models

- Sparse Latent Variable Models

- Latent Variable Prediction through a Trainable Encoder

- We are far from a complete solution and there is still a lot of work to be done in many domains
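
To make the "latent variables to handle multimodality" point a bit more concrete, here is a toy sketch of an energy-based model with a latent variable. Everything in it is my own illustration and not taken from the talk: the energy function `energy`, the two-valued latent `z` and the helper `free_energy` are all hypothetical. The idea is that a pair (x, y) is scored by the lowest energy over all values of the latent variable, so several different y can be equally compatible with the same x.

```python
# Toy energy-based model with a discrete latent variable.
# All names and the energy function are hypothetical, for illustration only.

Z_VALUES = (-1.0, 1.0)   # assumed set of values the latent z can take


def energy(x, y, z):
    """Low energy means y is compatible with x under latent z (here: y == z * x)."""
    return (y - z * x) ** 2


def free_energy(x, y):
    """Score (x, y) by the best latent: the minimum energy over all z."""
    return min(energy(x, y, z) for z in Z_VALUES)


x = 2.0
for y in (2.0, -2.0, 0.0):
    print(f"y={y:+.1f}  F(x, y)={free_energy(x, y):.1f}")
```

In this toy setting both y = 2 and y = -2 get zero energy, while their average y = 0 gets a higher one. The latent variable lets the model keep two distinct good answers instead of blending them into a single prediction.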

Alexei Efros - Self-Supervised Visual Learning and Synthesis

Jean Paul Laumond - Mathematical foundations of robot motion

More talks coming soon